Fabric Capacity Control Panel: Control and Manage All Fabric Capacities in one centralized panel

- Vahid

- Jul 9, 2024

- 12 min read

Updated: Jul 12, 2024

Microsoft Fabric provides essential capabilities such as pausing and resuming to save costs during downtime and scaling for adjusting capacity size as needed. Managing these operations efficiently is crucial for optimizing resource usage and maintaining operational flexibility. In this blog post, I'll show you how to create a single control panel (Report with Buttons) using Power BI and Power Automate for managing all your Fabric Capacities without the need to individually navigate the Azure Portal for each capacity.

Videos:

Part 1 | Part 2 |

Here are the key steps to create this control panel:

Set up a Power BI Streaming Dataset to store Fabric Capacities information.

Develop a Power Automate flow to scan your Azure Subscription, find all Fabric Capacities, and save their details into the Dataset.

Create two buttons and corresponding flows to pause or resume selected Fabric Capacities.

Create a button and Flow to dynamically scale up or down selected Fabric Capacities.

The result will be a Power BI report that includes four buttons, tables, and slicers to manage all your fabric capacities. This report can be created and stored in any type of workspace within the Power BI Service, like below:

Advantages of using this solution: 1- Controls all capacities in one centralized panel

2- No need to open and use Azure Portal

3- Streamline Fabric Admin tasks

4- Keep the log of all capacities

5- Resume capacities

6- Pause capacities

7- Scale up/down

Overall Structure:

So let's create the solution.

Set up a Power BI Streaming Dataset

Click on the New button and select Streaming Dataset in your Power BI Service workspace.

Make sure you create this dataset outside of workspaces with Fabric Capacity, because if you pause the capacity then items in related workspaces won't work.

In the New window, select API and click Next. Add a name for your Dataset and set up columns as follows:

Capacity Name - Text

SKU - Text

State - Text

Resource Group - Text

Date/Time - DateTime

Make sure to turn on the Historic data analysis and then click on Create.

Create a Power BI report using the Streaming Dataset

Open Power BI Desktop and connect that to the streaming dataset you have created in the previous step. Create a Table visual using all columns from that streaming dataset. At this stage, there is no data in the dataset, so your table visual will be blank.

First Flow: scan your Azure Subscription, find all Fabric Capacities, and save their details into the Dataset

The First flow is to scan your Azure Subscription, find all Fabric Capacities, and save their details into the Dataset. Add a Power Automate visual into your report.

The output of this visual is a button which will trigger a flow. When you add that visual to the report, you can drag data fields (measure, Column) to the values section of it and then you can click on ... menu of that visual and create your flow in Power Automate. Once your flow is ready, you can apply it to the button.

For this flow no data needed so click on edit and then in the Power Automate page click on New and select Instant cloud flow. The trigger for all Power Automate visuals is Power BI button clicked and that's has been already added to the flow by default. click on the new step and search for Azure Resource Manager actions group, find List resources by subscription action and add it as the first action to the flow.

Set this action as follow:

Subscription: select your subscription in this field

Click on the Show Advanced Options

Add "resourceType eq 'microsoft.fabric/capacities'" into the filter section

Note: We only need Fabric capacities as the result of this action and if you don't add the filter then the result will show all resources like SQL, Storage accounts, etc.

For more info about how to set the filter, read this: https://learn.microsoft.com/en-us/rest/api/resources/resources/list?view=rest-resources-2021-04-01#uri-parameters

The result of this action will show:

ID: The main details of the resource, like Subscription ID, Resource Group, Providers, ...

Name: Name of the capacity

Type: this will be "Microsoft.Fabric/capacities" as per the filter we set

SKU Name: The current SKU name of the capacity like F1, F4, ...

Location: Current location of the resource like australiaeast

Tags: list of tags if you added to your capacities (This is a good option to set filters if you have many capacities)

Most of required information are in the result of this action and only missed info is the state or the current status of the capacities (Active or Paused). So we need to add Read a resource action from Azure Resource Manager actions group to find that missed part.

Choose the same subscription as the previous action in the first field, then skip the resource group field for now and return to it after setting the other fields.

In Resource Provider search for Fabric and select Microsoft.Fabric, then in Short Resource ID type "capacities/" and then click on flash icon to add a dynamic value and in the list, select Name from the previous action. When you add this item, the flow will automatically create a loop (Apply to each) and keep your action inside that because you might have more than one capacity and then it needs to run this action for each of them to find the status one by one. Add 2023-11-01 as the Client Api Version, and finally. we need to add Resource Group and we can't select one group from the list because there might be different capacities in different resource groups, so we need to make that dynamic by writing a custom formula (expression). Click on the drop down list and select Custom value, then click on the fx button and add this expression:

split(items('Apply_to_each')?['id'], '/')[4]This expression will find the resource group for each capacity from the ID field of the previous action.

The output of this action has a section named Properties and inside that we can find the state of the capacity.

Now, we have all required main information about our Microsoft Fabric capacities and need to save them into the Streaming Dataset (Semantic Model 😕).

Click on the new step inside the loop after the previous action, search for Power BI action group, and select Add rows to a dataset.

In this action, select the workspace and dataset name (the streaming dataset we created before for this solution), the table name is always RealTimeData and then all columns will be displayed. Set the columns as below: Capacity Name: Select dynamic value - Name from the Read a resource action

SKU: Select dynamic value - SKU Name from the Read a resource action

State: need to write expression to extract it from the properties section of the Read a resource action output. add this:

outputs('Read_a_resource')?['body/properties'].stateDateTime: You can select Timstamp as a dynamic value from Power BI trigger but that will be in UTC time, or write an expression like this to convert it to local (Australia/Sydney):

convertFromUtc(triggerOutputs()['headers']['x-ms-user-timestamp'],'AUS Eastern Standard Time')Resource Group: Same as before add this expression:

split(items('Apply_to_each')?['id'], '/')[4] Make sure to add a good name to your flow and then click on Save and Apply, and Return to the Power BI report. Your first button is ready 🎉

Click on the button to test it and refresh the visuals to see the result. For this scenario, we use real-time datasets, so the data will be displayed when you refresh the visuals, without needing to refresh the data model. This scenario saves all records after every action, which is a great way to keep logs.

Add two buttons and corresponding flows to pause or resume selected Fabric Capacities

Using the previous button and flow, you will find all capacities, and now you can add two new buttons to change their state from pause to resume or vice versa.

To be able to select which capacities we want to apply new actions (Resume, Pause, Scale) on, add a slicer with Capacity Name column from the streaming dataset table to the report before adding the buttons.

Resume Flow and Button:

add Power Auotmate visual to the Power BI report and this time we need to add 2 columns to this visual to be able to use those columns' data in the Power Automate flow.

We need to know which capacities have been selected to change their status, perhaps one, multiple, or all of them, so we should add Capacity Name and Resource Group columns to the Power Automate Data selection as below.

Then click on ... and select Edit. In the Power Automate page, click on New at the left top section and select Instant Cloud Flow.

The trigger is same as before, click on the next step and from Azure Resource Manager, select the Read a Resource and set that as below: Subscription: Select the same subscription same as previous section.

Resource Group: Select Dynamic Field - Click on the enter custom value, and select Power BI data Resource Group which is the column we added to this visual. (This will return the selected capacities data, and since more than one capacity might selected, it will be automatically added to the loop when it is added.

Resource Provider: Microsoft.Fabric

Short Resource Id: type "capacities/" and then click on the enter custom value, and select Power BI data Capacity Name which is the column we added to this visual.

Client Api Version: 2023-11-01

The output of the above action will show the current state of each capacity, and if the capacity is already active, we should ignore it and resume only the paused capacities. so, we need to add a condition to check the state of each capacity before taking any action. Click on new step, add condition action and set the condition as below: Choose a value: Click on the add expression and use this:

outputs('Read_a_resource')?['body/properties'].stateand set the condition to is equal to Active as below:

If the answer is Yes, then we need to ignor it, so keep If Yes blank. In If No section of the condition, add Invoke resource operation action from Azure Resource Manager group. With this action you can Invoke an operation on an Azure resource like pause and resume.

Set this action exactly same as previous action and add resume into the Action name field and leave the Body field blank.

Now, the state of the capacity has changed. To ensure it is done without any issue, we need to check the status by adding another Read a Resource action to the flow after the Invoke resource operation and inside the If No section of the condition.

Set this same as previous read a resource action above and then we can save the new data into the dataset and update the log and show the new log on the Power BI visual. Add the new action Add rows to a dataset to the flow in the If No section of the condition, after the read a resource 2. Select the workspace, dataset, and table and set the columns below:

Capacity Name: Dynamic value - Name from the second read a resource after the invoke action

SKU: Dynamic value - SKU Name from the second read a resource after the invoke action

State: Add custom expression as below (This time we need to use the data from the second read a resource action inside If No section of the flow):

outputs('Read_a_resource_2')?['body/properties'].stateDateTime: You can select Timestamp as a dynamic value from Power BI trigger but that will be in UTC time, or write an expression like this to convert it to local (Australia/Sydney):

convertFromUtc(triggerOutputs()['headers']['x-ms-user-timestamp'],'AUS Eastern Standard Time')Resource Group: Select Dynamic Field - Click on the enter custom value, and select Power BI data Resource Group which is the column we added to this visual.

Name your flow, click Save and Apply, and return to the Power BI report. The new button to resume a single capacity, multiple selected capacities, or all capacities has been added to the report. You can select your paused capacities and click on the button to run the flow and test it.

Pause Flow and Button:

This is same as Resume flow with few changes. Open the Power Automate in your internet browser, create a save as from the resume flow we created before this section and set the name to Pause Capacities. No need to go to Power BI, open the new flow in the browser and make that active and click on Edit.

The only changes you need to do:

Move all actions from If No section of the condition to the If Yes section. This means pause the capacity when it is active, otherwise ignore it

Change the Action Name field in Invoke resource operation from resume to suspend

And your flow is ready for pausing selected capacities. Return to the Power BI report, Add a new Power Automate visual, add Capacity Name and Resource Group columns to the Power Automate Data same as before. Click on ... and select Edit, this time no need to create a new flow because we already created that, find th enew flow in the list and click on ✓ button to apply it to the visual and then return to the report. Your pausing button is ready!

Create a button and Flow to dynamically scale up or down selected Fabric Capacities

For this new button to scale up or down a single capacity, multiple selected capacities, or all capacities, a new table with list of the Microsoft Fabric SKU needs to be added to the Power BI report. You can find the list here: https://learn.microsoft.com/en-us/fabric/enterprise/licenses#capacity-license

Copy the table, in Power BI report click on Enter data, and paste the data there. remove the trial line from the table, name it as SKU table and load it. Before adding this table, your connection to the streaming dataset was live and it will ask you to change it to Direct Query which is fine.

Once the new table loaded, make sure there is no relationships between tables. Add a slicer with single selection option to the report using the SKU column from the new SKU table.

Write a measure using this DAX expression:

SKU Selected = SELECTEDVALUE( 'SKU Table'[SKU] )Add a new Power Automate visual to the report, add the above measure, Capacity Name and Resource Group columns to the visual. Click on ... and select Edit. In the Power Automate page, click on the new and select Instant cloud flow.

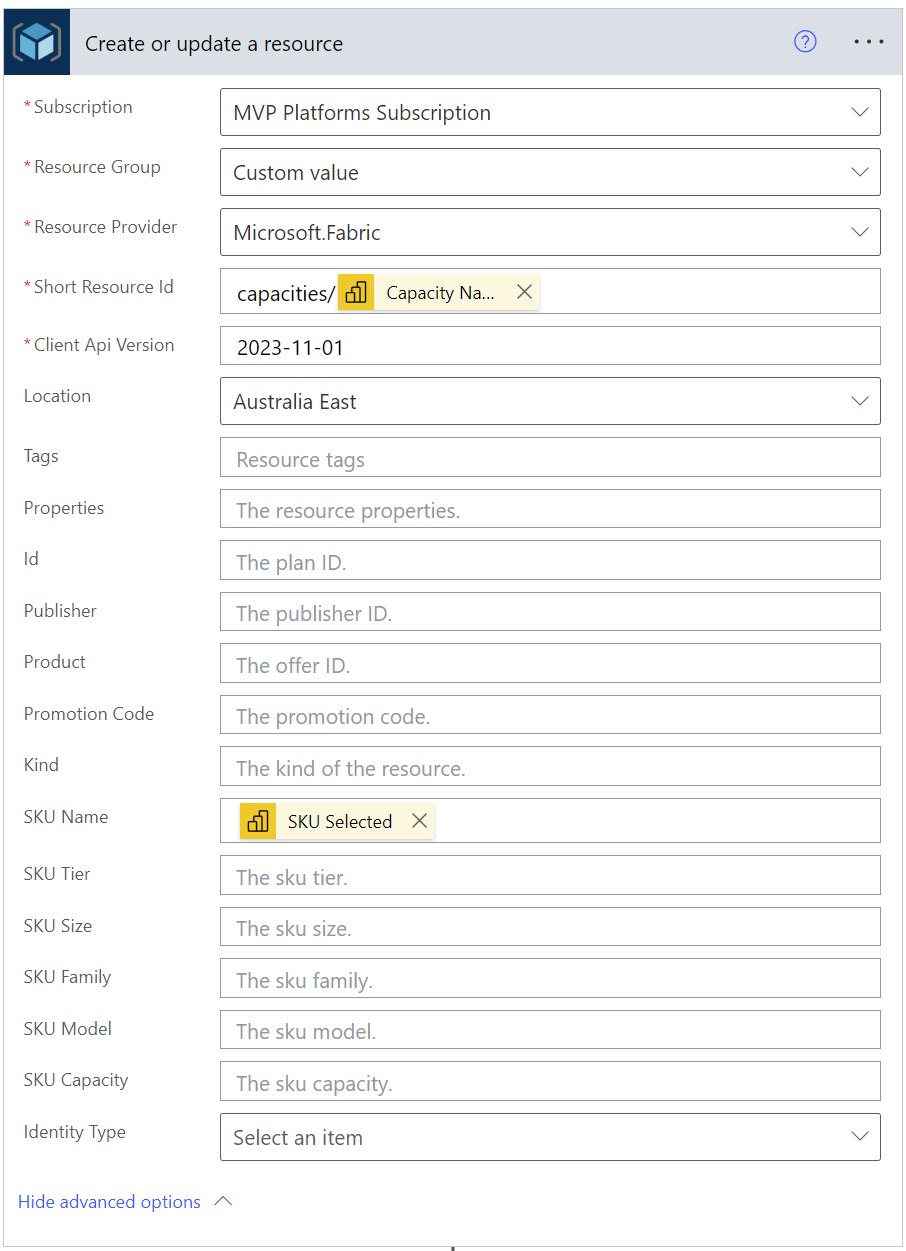

Add Create or update a resource action from the Azure Resource Manager group to the flow and set that as below:

Subscription: Same as before, select from the dropdown

Resource Group: Select Dynamic Field - Click on the enter custom value, and select Power BI data Resource Group which is the column we added to this visual. (This will return the selected capacities data, and since more than one capacity might selected, it will be automatically added to the loop when it is added.

Resource Provider: Microsoft.Fabric

Short Resource Id: type "capacities/" and then click on the enter custom value, and select Power BI data Capacity Name which is the column we added to this visual.

Client Api Version: 2023-11-01

Location: Select a location from the drop down - make sure to select the correct location because the price is different - You can even use this method to change the location as well.

Click on show advanced options.

SKU Name: Click on the enter custom value, and select Power BI data SKU Selected which is the measure we added to this visual.

Click on the add action and from Azure Resource Manager, select the Read a Resource which will return the new information after changing the SKU and set that as below:

Subscription: Select the same subscription same as previous section.

Resource Group: Select Dynamic Field - Click on the enter custom value, and select Power BI data Resource Group which is the column we added to this visual.

Resource Provider: Microsoft.Fabric

Short Resource Id: type "capacities/" and then click on the enter custom value, and select Power BI data Capacity Name which is the column we added to this visual.

Client Api Version: 2023-11-01

Now the scale process is completed and we have the new information about capacities, so we need to save the new records into the Streaming Dataset.

Add the new action Add rows to a dataset to the flow. Select the workspace, dataset, and table and set the columns below:

Capacity Name: Dynamic value - Name from the second read a resource after the invoke action or click on the enter custom value, and select Power BI data Capacity Name which is the column we added to this visual (Each of them will work).

SKU: Dynamic value - SKU Name from the read a resource

State: Add custom expression as below:

outputs('Read_a_resource')?['body/properties'].stateDateTime: You can select Timstamp as a dynamic value from Power BI trigger but that will be in UTC time, or write an expression like this to convert it to local (Australia/Sydney):

convertFromUtc(triggerOutputs()['headers']['x-ms-user-timestamp'],'AUS Eastern Standard Time')Resource Group: Select Dynamic Field - Click on the enter custom value, and select Power BI data Resource Group which is the column we added to this visual.

Your Flow is ready, click on save and apply and back to the report. The button is created but before clicking on the button please pay attention to this important note:

You can change the SKU and scale up or down active capacities with this method. Please note that it cannot change the SKU for paused capacities. Ensure the capacities you selected are active before clicking the scale button. If not, click the Resume button first to activate them.

Click the scale button and wait a few seconds. Once the process is complete, new records will be added to the dataset. Refresh the visuals to see the updated status.

With this method, the SKU for selected capacities regardless of their currect SKU will change to the chosen SKU. This allows for scaling up one capacity while scaling down another.

Conclusion:

Using this method, you can control all your Fabric capacities in one centralized panel within Fabric through a Power BI report. This method simplifies your admin tasks and helps you save money by allowing you to pause capacities or scale them up and down as needed. As this flows are a little big complicated, I highly recommend to watch the videos as well. If you have any comments or ideas, please share them in the comment section.

Comments